1. Overview

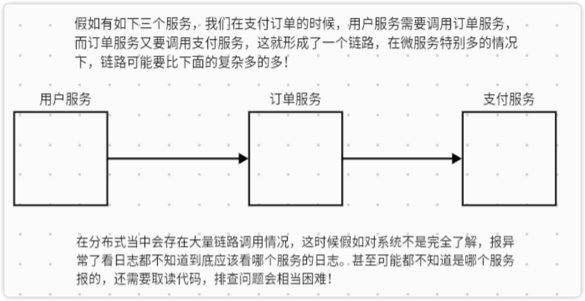

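1.1 Problems faced by distributed systems

In a microservice architecture, a single client request passes through many service nodes on the back end, which cooperate to produce the final result. Every request therefore forms a complex distributed call chain, and high latency or an error at any link in that chain can cause the whole request to fail.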

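1.2 What is Sleuth

Official site: https://docs.spring.io/spring-cloud-sleuth/docs/current/reference/html/

Spring Cloud Sleuth provides a complete solution for service tracing. It records the calls between services, so you can quickly see which services a given call involves and track down problems faster.

Its main features are:

- Adds trace and span IDs to the Slf4j MDC, so that all logs belonging to a given trace or span can be extracted in a log aggregator.
- Instruments common ingress and egress points of Spring applications (servlet filter, rest template, scheduled actions, message channels, feign client).
- If spring-cloud-sleuth-zipkin is available, the application generates and reports Zipkin-compatible traces over HTTP. By default it sends them to a Zipkin collector service on localhost (port 9411). Use spring.zipkin.baseUrl to configure the address of the Zipkin service.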

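1.3 Zipkin

For distributed tracing, Spring Cloud Sleuth only generates the data, and that data is not easy for people to read. The trace data is therefore usually uploaded to a Zipkin server, and Zipkin presents it in its UI.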

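1.4 Tracing terminology

- Span: the basic unit of work. For example, sending an RPC is a new span, as is sending a response to an RPC. A span also carries other data, such as a description, timestamped events, key-value annotations (tags), the ID of the span that caused it, and a process ID (usually an IP address). Spans can be started and stopped, and they keep track of their timing information. Once you create a span, you must stop it at some point in the future.
- Trace: a set of spans forming a tree-like structure.
- Annotation/Event: records the existence of an event in time. The event types are:
  - cs (Client Sent): the client has made a request. This annotation marks the start of the span.
  - sr (Server Received): the server has received the request and started processing it. Subtracting the cs timestamp from this timestamp gives the network latency.
  - ss (Server Sent): annotated when request processing completes (when the response is sent back to the client). Subtracting the sr timestamp from this timestamp gives the time the server needed to process the request.
  - cr (Client Received): marks the end of the span. The client has successfully received the response from the server. Subtracting the cs timestamp from this timestamp gives the total time the client needed to receive the response from the server.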
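The subtractions described for cs, sr, ss and cr can be written out as a small worked example. This is only a sketch: the class name `SpanTimings` and the sample timestamps are made up for illustration, and the network latency figure is only meaningful when the client and server clocks are synchronized.

```java
/** Derives durations from the four core annotation timestamps (cs, sr, ss, cr). */
final class SpanTimings {

    /** sr - cs: time the request spent on the network before the server saw it. */
    static long networkLatency(long cs, long sr) {
        return sr - cs;
    }

    /** ss - sr: time the server spent processing the request. */
    static long serverProcessing(long sr, long ss) {
        return ss - sr;
    }

    /** cr - cs: total time the client waited for the response. */
    static long totalClientTime(long cs, long cr) {
        return cr - cs;
    }

    public static void main(String[] args) {
        // Hypothetical timestamps in microseconds, chosen only for the example.
        long cs = 1_000, sr = 1_150, ss = 1_900, cr = 2_100;
        System.out.println("network latency   = " + networkLatency(cs, sr));
        System.out.println("server processing = " + serverProcessing(sr, ss));
        System.out.println("total client time = " + totalClientTime(cs, cr));
    }
}
```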

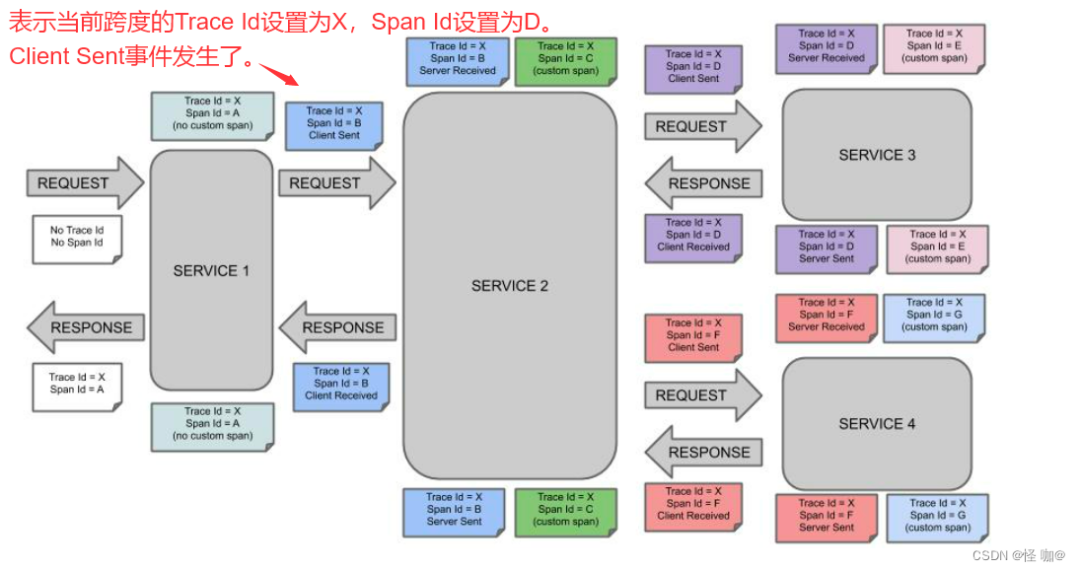

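The following figure shows what a Span and a Trace look like in a system.

The following figure shows the parent-child relationships between Spans:

You can continue an existing span (the example marked "no custom span") or create child spans manually (the example marked "custom span").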

1.5 Demo

Add the dependencies:

    <properties>
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <springboot.version>2.6.8</springboot.version>
        <springcloud.version>2021.0.3</springcloud.version>
    </properties>

    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-dependencies</artifactId>
                <version>${springboot.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-dependencies</artifactId>
                <version>${springcloud.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter-sleuth</artifactId>
        </dependency>
    </dependencies>

Add the YAML configuration:

    server:
      port: 8080
    spring:
      sleuth:
        sampler:
          # sample every request
          probability: 1.0
      application:
        name: sleuth-demo
    # log level
    logging:
      level:
        org:
          springframework:
            web:
              servlet:
                DispatcherServlet: DEBUG

Add the Controller:

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    /**
     * @author xiaoyuge
     */
    @RestController
    public class ExampleController {

        private static final Logger logger = LoggerFactory.getLogger(ExampleController.class);

        // The logs below show GET "/" reaching this controller, so the mapping is restored as "/".
        @GetMapping("/")
        public String index() {
            logger.info("hello world");
            return "hello world";
        }
    }

Start the application, visit localhost:8080, then check the logs:

    2023-07-15 09:32:51.177 INFO [sleuth-demo,92f966e849750cc3,92f966e849750cc3] 81138 --- [nio-8080-exec-1] o.s.web.servlet.DispatcherServlet : Completed initialization in 1 ms
    2023-07-15 09:32:51.187 DEBUG [sleuth-demo,92f966e849750cc3,92f966e849750cc3] 81138 --- [nio-8080-exec-1] o.s.web.servlet.DispatcherServlet : GET "/", parameters={}
    2023-07-15 09:32:51.204 INFO [sleuth-demo,92f966e849750cc3,92f966e849750cc3] 81138 --- [nio-8080-exec-1] org.example.ExampleController : hello world
    2023-07-15 09:32:51.230 DEBUG [sleuth-demo,92f966e849750cc3,92f966e849750cc3] 81138 --- [nio-8080-exec-1] o.s.web.servlet.DispatcherServlet : Completed 200 OK
    2023-07-15 09:32:51.280 DEBUG [sleuth-demo,15df1d4c4021e661,15df1d4c4021e661] 81138 --- [nio-8080-exec-2] o.s.web.servlet.DispatcherServlet : GET "/favicon.ico", parameters={}
    2023-07-15 09:32:51.288 DEBUG [sleuth-demo,15df1d4c4021e661,15df1d4c4021e661] 81138 --- [nio-8080-exec-2] o.s.web.servlet.DispatcherServlet : Completed 404 NOT_FOUND
    2023-07-15 09:32:51.292 DEBUG [sleuth-demo,15df1d4c4021e661,15df1d4c4021e661] 81138 --- [nio-8080-exec-2] o.s.web.servlet.DispatcherServlet : "ERROR" dispatch for GET "/error", parameters={}
    2023-07-15 09:32:51.349 DEBUG [sleuth-demo,15df1d4c4021e661,15df1d4c4021e661] 81138 --- [nio-8080-exec-2] o.s.web.servlet.DispatcherServlet : Exiting from "ERROR" dispatch, status 404

Tips: [sleuth-demo,92f966e849750cc3,92f966e849750cc3] corresponds to [application name, trace id, span id].
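Because every log line carries the [application name, trace id, span id] prefix, the three values can be pulled out with a regular expression, for example when filtering logs by trace. The following is a minimal sketch; the class name `SleuthLogPrefix` and the regular expression are assumptions written for this example and are not part of Sleuth.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Holds the values of the "[application,traceId,spanId]" prefix of a log line. */
final class SleuthLogPrefix {
    // First bracket group of the form [name,hex,hex]; the ids may be empty when no span is active.
    private static final Pattern PREFIX =
            Pattern.compile("\\[([^,\\]]*),([0-9a-f]*),([0-9a-f]*)\\]");

    final String application;
    final String traceId;
    final String spanId;

    private SleuthLogPrefix(String application, String traceId, String spanId) {
        this.application = application;
        this.traceId = traceId;
        this.spanId = spanId;
    }

    /** Returns the parsed prefix, or an empty Optional if the line has no such prefix. */
    static Optional<SleuthLogPrefix> parse(String logLine) {
        Matcher m = PREFIX.matcher(logLine);
        if (!m.find()) {
            return Optional.empty();
        }
        return Optional.of(new SleuthLogPrefix(m.group(1), m.group(2), m.group(3)));
    }

    public static void main(String[] args) {
        String line = "2023-07-15 09:32:51.204 INFO [sleuth-demo,92f966e849750cc3,92f966e849750cc3] "
                + "81138 --- [nio-8080-exec-1] org.example.ExampleController : hello world";
        parse(line).ifPresent(p ->
                System.out.println(p.application + " trace=" + p.traceId + " span=" + p.spanId));
    }
}
```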

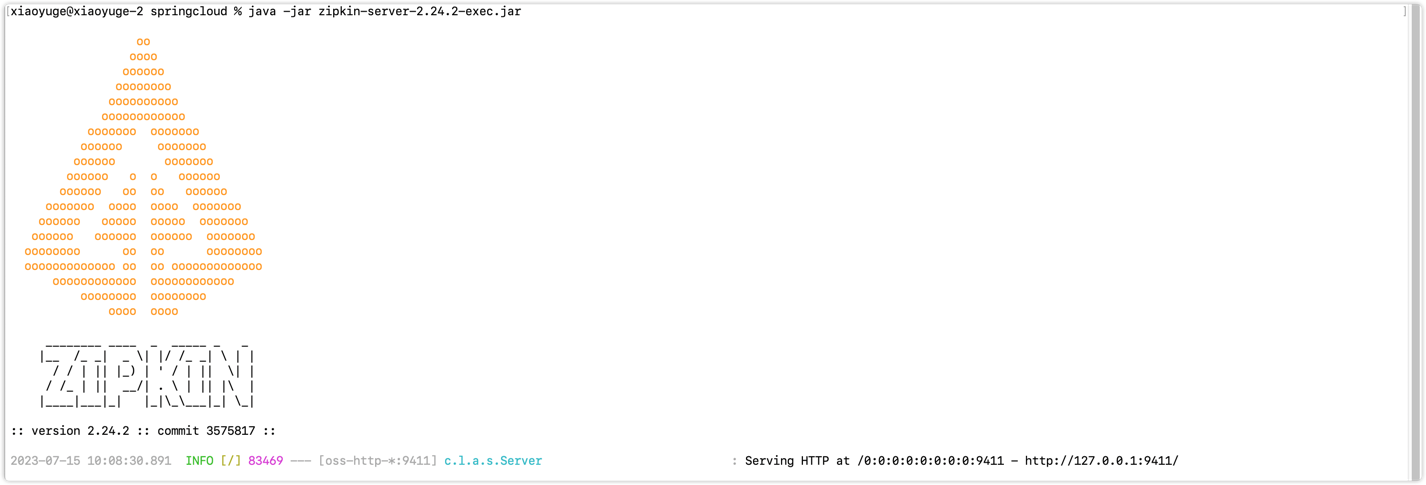

2. Downloading and starting Zipkin

Download version 2.24.2 (the latest at the time of writing):

https://repo1.maven.org/maven2/io/zipkin/zipkin-server/2.24.2/zipkin-server-2.24.2-exec.jar

Zipkin official site:

https://zipkin.io/pages/quickstart.html

Zipkin on GitHub:

https://github.com/openzipkin/zipkin

After downloading the jar, start it directly with java -jar xxx.jar.

Then visit: http://localhost:9411/zipkin/

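2.1 Setting up tracing

Prepare at least two services, one calling the other, so that the call chain can be viewed in Zipkin.

- Consumer: sleuth-consume, port 8081
- Provider: sleuth-provider, port 8082
- Remote calls use OpenFeign

Create the project sleuth-zipkin-demo.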

Add the dependencies:

    <properties>
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <springboot.version>2.6.8</springboot.version>
        <springcloud.version>2021.0.3</springcloud.version>
    </properties>

    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-dependencies</artifactId>
                <version>${springboot.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-dependencies</artifactId>
                <version>${springcloud.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

Next, create two modules: sleuth-provider and sleuth-consume.

2.1.1 sleuth-provider

Add the pom dependencies:

    <parent>
        <artifactId>sleuth-zipkin-demo</artifactId>
        <groupId>org.example</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter-zipkin</artifactId>
            <version>2.2.8.RELEASE</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter-openfeign</artifactId>
        </dependency>
    </dependencies>

Some Spring Cloud versions do not manage the version of spring-cloud-starter-zipkin, so it has to be declared explicitly; 2.2.8.RELEASE is the latest release of this artifact. spring-cloud-starter-zipkin already pulls in spring-cloud-starter-sleuth, so Sleuth does not need to be declared separately. (Sleuth 3.x, which the 2021.0.x release train ships, documents spring-cloud-starter-sleuth together with spring-cloud-sleuth-zipkin as the replacement for this starter.)

Add the YAML configuration:

    server:
      port: 8082
    spring:
      application:
        name: sleuth-provider
    # log level
    logging:
      level:
        org.springframework.web.servlet.DispatcherServlet: DEBUG

Add a Controller to test the call chain:

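    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    /**
     * @author xiaoyuge
     */
    @RestController
    public class ProviderController {

        // The logs below show GET "/provider", so the mapping is restored with that path.
        @GetMapping("/provider")
        public String get() {
            return "called the provider endpoint";
        }
    }

Start the application, visit http://localhost:8082/provider several times, and check the logs: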

    2023-07-15 10:50:39.192 INFO [sleuth-provider,0279711252ab628e,0279711252ab628e] 86229 --- [nio-8082-exec-1] o.a.c.c.C.[Tomcat].[localhost].[/] : Initializing Spring DispatcherServlet 'dispatcherServlet'
    2023-07-15 10:50:39.192 INFO [sleuth-provider,0279711252ab628e,0279711252ab628e] 86229 --- [nio-8082-exec-1] o.s.web.servlet.DispatcherServlet : Initializing Servlet 'dispatcherServlet'
    2023-07-15 10:50:39.192 DEBUG [sleuth-provider,0279711252ab628e,0279711252ab628e] 86229 --- [nio-8082-exec-1] o.s.web.servlet.DispatcherServlet : Detected StandardServletMultipartResolver
    2023-07-15 10:50:39.192 DEBUG [sleuth-provider,0279711252ab628e,0279711252ab628e] 86229 --- [nio-8082-exec-1] o.s.web.servlet.DispatcherServlet : Detected AcceptHeaderLocaleResolver
    2023-07-15 10:50:39.192 DEBUG [sleuth-provider,0279711252ab628e,0279711252ab628e] 86229 --- [nio-8082-exec-1] o.s.web.servlet.DispatcherServlet : Detected FixedThemeResolver
    2023-07-15 10:50:39.193 DEBUG [sleuth-provider,0279711252ab628e,0279711252ab628e] 86229 --- [nio-8082-exec-1] o.s.web.servlet.DispatcherServlet : Detected org.springframework.web.servlet.view.DefaultRequestToViewNameTranslator@389901fa
    2023-07-15 10:50:39.193 DEBUG [sleuth-provider,0279711252ab628e,0279711252ab628e] 86229 --- [nio-8082-exec-1] o.s.web.servlet.DispatcherServlet : Detected org.springframework.web.servlet.support.SessionFlashMapManager@25b365bd
    2023-07-15 10:50:39.194 DEBUG [sleuth-provider,0279711252ab628e,0279711252ab628e] 86229 --- [nio-8082-exec-1] o.s.web.servlet.DispatcherServlet : enableLoggingRequestDetails='false': request parameters and headers will be masked to prevent unsafe logging of potentially sensitive data
    2023-07-15 10:50:39.194 INFO [sleuth-provider,0279711252ab628e,0279711252ab628e] 86229 --- [nio-8082-exec-1] o.s.web.servlet.DispatcherServlet : Completed initialization in 2 ms
    2023-07-15 10:50:39.201 DEBUG [sleuth-provider,0279711252ab628e,0279711252ab628e] 86229 --- [nio-8082-exec-1] o.s.web.servlet.DispatcherServlet : GET "/provider", parameters={}
    2023-07-15 10:50:39.229 DEBUG [sleuth-provider,0279711252ab628e,0279711252ab628e] 86229 --- [nio-8082-exec-1] o.s.web.servlet.DispatcherServlet : Completed 200 OK
    2023-07-15 10:50:39.276 DEBUG [sleuth-provider,c7e313833ab1389e,c7e313833ab1389e] 86229 --- [nio-8082-exec-2] o.s.web.servlet.DispatcherServlet : GET "/favicon.ico", parameters={}
    2023-07-15 10:50:39.279 DEBUG [sleuth-provider,c7e313833ab1389e,c7e313833ab1389e] 86229 --- [nio-8082-exec-2] o.s.web.servlet.DispatcherServlet : Completed 404 NOT_FOUND
    2023-07-15 10:50:39.281 DEBUG [sleuth-provider,c7e313833ab1389e,c7e313833ab1389e] 86229 --- [nio-8082-exec-2] o.s.web.servlet.DispatcherServlet : "ERROR" dispatch for GET "/error", parameters={}
    2023-07-15 10:50:39.324 DEBUG [sleuth-provider,c7e313833ab1389e,c7e313833ab1389e] 86229 --- [nio-8082-exec-2] o.s.web.servlet.DispatcherServlet : Exiting from "ERROR" dispatch, status 404

Note:

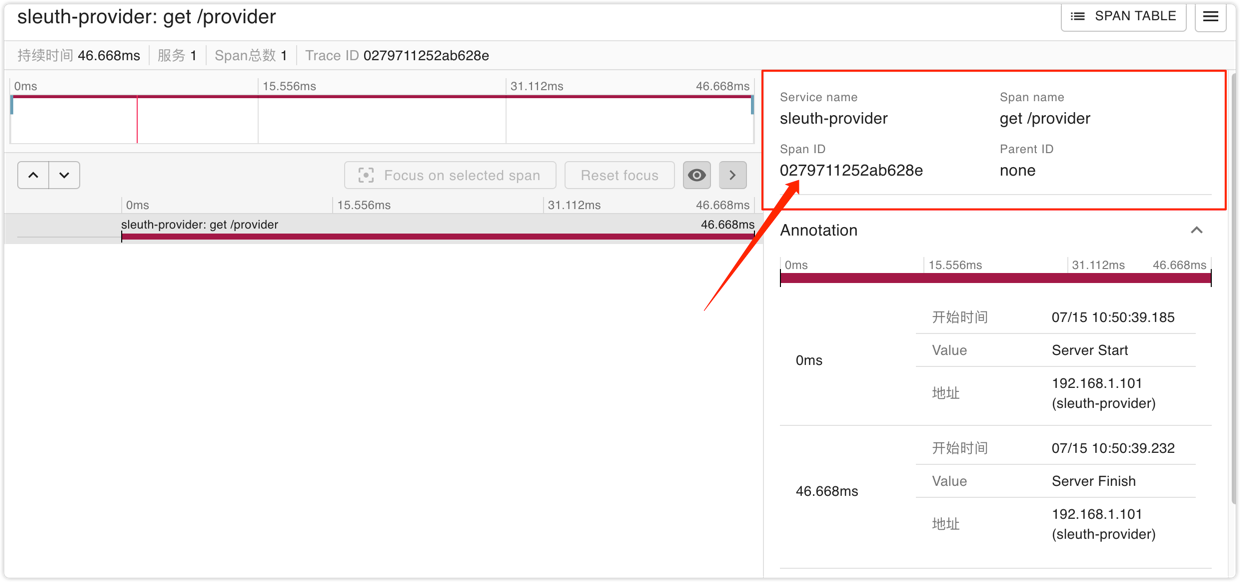

0279711252ab628e is the TraceId; it is used in the next step.

Open the Zipkin UI and search for the TraceId above.

2.1.2 sleuth-consume

Add the dependencies:

    <parent>
        <artifactId>sleuth-zipkin-demo</artifactId>
        <groupId>org.example</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter-zipkin</artifactId>
            <version>2.2.8.RELEASE</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter-openfeign</artifactId>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-api</artifactId>
        </dependency>
    </dependencies>

Add the configuration:

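    server:
      port: 8081
    spring:
      application:
        name: sleuth-consume
    # log level
    logging:
      level:
        org.springframework.web.servlet.DispatcherServlet: DEBUG

Add @EnableFeignClients to the application class:

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.cloud.openfeign.EnableFeignClients;

    @EnableFeignClients
    @SpringBootApplication
    public class ConsumeApplication {

        public static void main(String[] args) {
            SpringApplication.run(ConsumeApplication.class, args);
        }
    }

Add the remote-call interface: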

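    import org.springframework.cloud.openfeign.FeignClient;
    import org.springframework.web.bind.annotation.GetMapping;

    /**
     * @author xiaoyuge
     */
    // Assumed attributes: the original annotation was lost. No service registry is shown
    // in this demo, so a fixed URL pointing at sleuth-provider (port 8082) is used here.
    @FeignClient(name = "sleuth-provider", url = "http://localhost:8082")
    public interface FeignService {

        @GetMapping("/provider")
        String get();
    }

Add the Controller:

    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class ExampleController {

        @Autowired
        private FeignService feignService;

        // Assumed mapping: the demo is accessed at http://localhost:8081, so "/" is used.
        @GetMapping("/")
        public String home() {
            return feignService.get();
        }
    }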

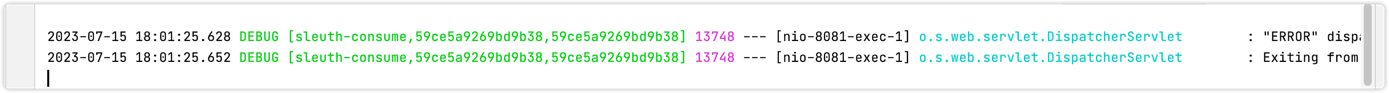

2.1.3 Calling the service

Visit: http://localhost:8081

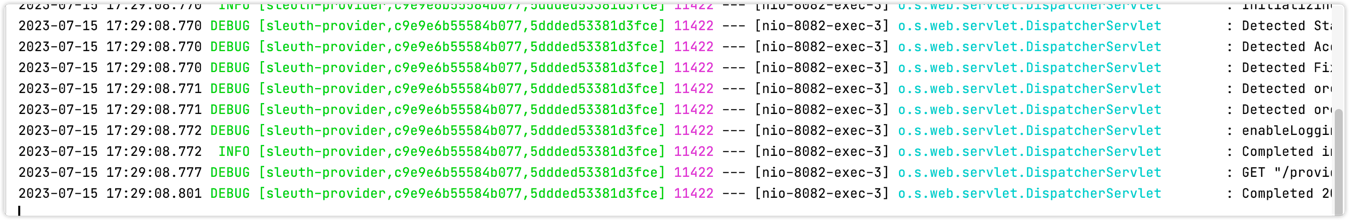

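sleuth-consume service logs

sleuth-provider service logs

Comparing the logs of the two services shows that the Trace Id is the same in both. This is because sleuth-consume called sleuth-provider, so both belong to the same trace. It is Sleuth, not Zipkin, that makes this happen.

You can replace the Zipkin dependency with the Sleuth dependency and run the call again: the trace id is still the same. Zipkin can be thought of as a jar that provides a visual interface. The Zipkin dependency is added to a project mainly to upload Sleuth's trace data to Zipkin, which then organizes the data and displays it on a page. The tracing itself is done by Sleuth.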
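The shared trace id comes from trace context being passed along with each outgoing request. With Sleuth's default Brave tracer this uses B3 HTTP headers such as X-B3-TraceId and X-B3-SpanId. The sketch below only illustrates the idea; the class `B3Propagation` and its methods are hypothetical names written for this example, not Sleuth's implementation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ThreadLocalRandom;

/** Illustrates how a caller could derive the B3 headers for an outgoing call. */
final class B3Propagation {

    /** Builds the headers sent to the next service for the caller's current span. */
    static Map<String, String> childHeaders(String traceId, String currentSpanId) {
        Map<String, String> headers = new HashMap<>();
        headers.put("X-B3-TraceId", traceId);             // unchanged along the whole chain
        headers.put("X-B3-ParentSpanId", currentSpanId);  // the caller's span becomes the parent
        headers.put("X-B3-SpanId", newSpanId());          // fresh id for the new span
        headers.put("X-B3-Sampled", "1");                 // the sampling decision travels too
        return headers;
    }

    /** Generates a random 64-bit id rendered as 16 lower-case hex characters. */
    static String newSpanId() {
        return String.format("%016x", ThreadLocalRandom.current().nextLong());
    }

    public static void main(String[] args) {
        // Values taken from the provider log above, used here only as sample input.
        System.out.println(childHeaders("0279711252ab628e", "0279711252ab628e"));
    }
}
```

Because only the span id changes from hop to hop, every service that logs the incoming X-B3-TraceId prints the same trace id, which matches what the two service logs show.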

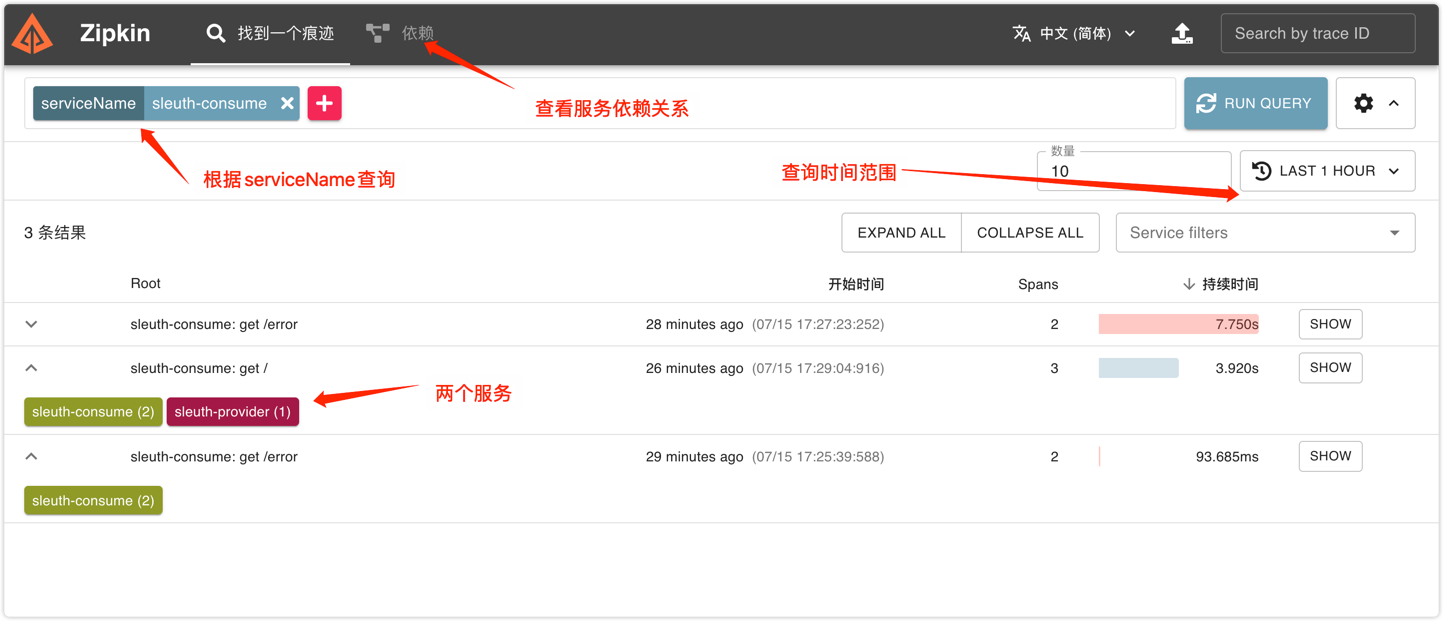

Then take another look at Zipkin:

2.1.4 Testing an exception

Introduce an exception in the service provider's endpoint, as follows:

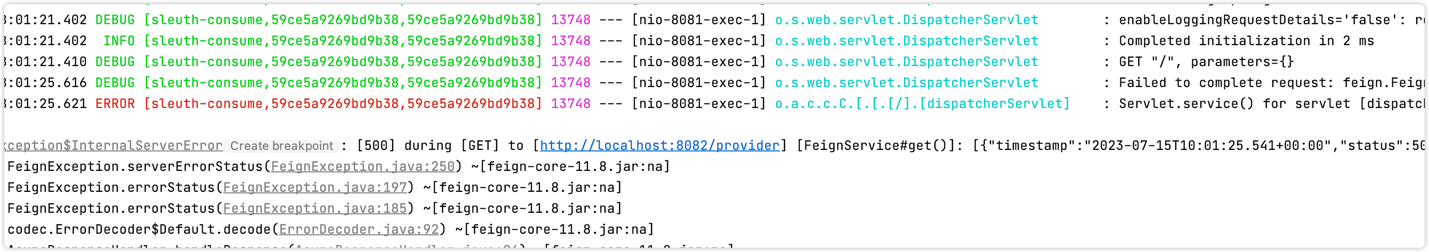

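Visit http://localhost:8081 again, get the Trace Id, and then use it in Zipkin to locate the cause of the bug quickly.

Then open the trace details. They show clearly that the exception occurred in the sleuth-provider endpoint while sleuth-consume was calling sleuth-provider.

Without Sleuth, when a remote call fails we cannot see why it failed; we only know that calling the remote service returned an error. To find out which exception was actually thrown, we would have to look through the logs of the remote service and match the call time against the log timestamps.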

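2.2 Sleuth configuration

By default, Sleuth sends trace data to the Zipkin collector service at localhost:9411. If the microservices are not all deployed on the same machine, use spring.zipkin.baseUrl to configure the location of the Zipkin service.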

1 | server: |

For other Sleuth configuration properties, see the official documentation.

2.3 Ways to send data to Zipkin

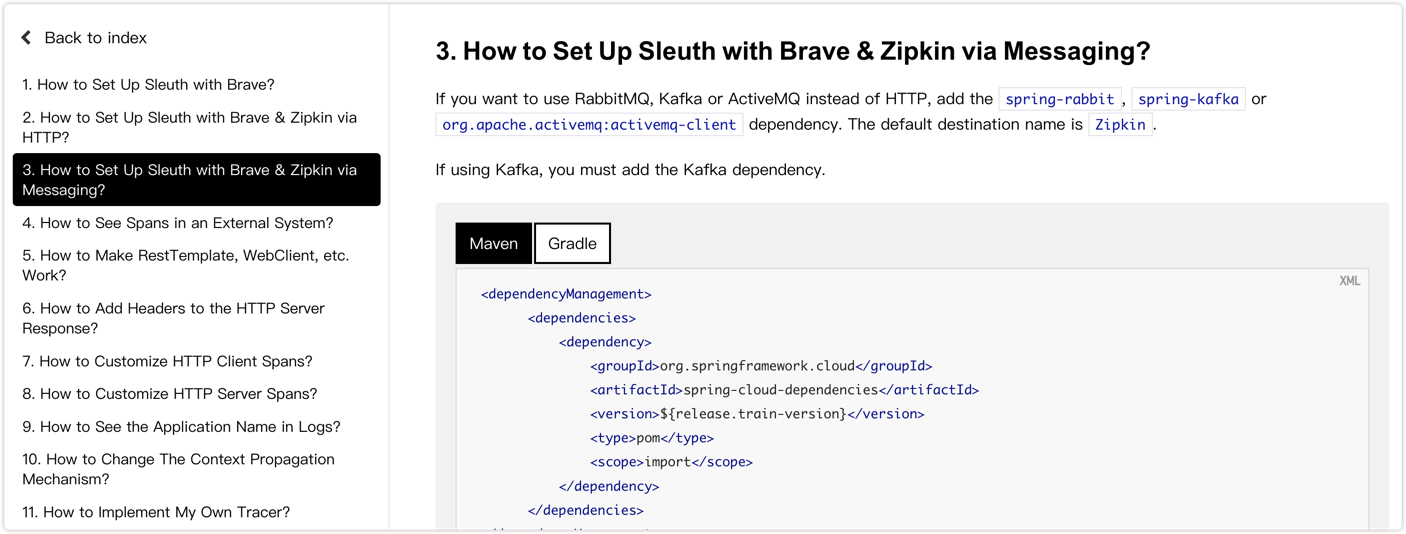

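Official documentation: https://docs.spring.io/spring-cloud-sleuth/docs/current/reference/html/howto.html#howto

The data that Zipkin displays is sent to it by the services. By default it is sent over HTTP.

In practice, sending over HTTP can put pressure on the servers. Under very high concurrency it can tie up a large number of threads, because an HTTP exchange is only finished once the request has been sent and the reply has been received.

For this reason, the data can also be sent through message middleware. Once a message has been handed to the middleware, the service no longer has to wait, which avoids thread congestion. RabbitMQ, Kafka and ActiveMQ are currently supported.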
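The benefit of handing spans to a broker instead of waiting for an HTTP reply can be illustrated with a bounded in-memory buffer: request threads only enqueue, and a separate sender drains the buffer in batches. This is a simplified sketch under that assumption; `BufferedSpanReporter` and its methods are hypothetical and do not reproduce the reporter that Sleuth or Zipkin actually use.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;

/** Illustrative only: a bounded buffer between request threads and a span sender. */
final class BufferedSpanReporter {
    private final BlockingQueue<String> pending;
    private final AtomicInteger dropped = new AtomicInteger();

    BufferedSpanReporter(int capacity) {
        this.pending = new ArrayBlockingQueue<>(capacity);
    }

    /** Called on the request thread: never blocks, drops the span when the buffer is full. */
    boolean report(String encodedSpan) {
        boolean accepted = pending.offer(encodedSpan);
        if (!accepted) {
            dropped.incrementAndGet();
        }
        return accepted;
    }

    /** Called by a background sender: removes up to maxBatch spans to send in one message. */
    List<String> nextBatch(int maxBatch) {
        List<String> batch = new ArrayList<>();
        pending.drainTo(batch, maxBatch);
        return batch;
    }

    int droppedCount() {
        return dropped.get();
    }

    public static void main(String[] args) {
        BufferedSpanReporter reporter = new BufferedSpanReporter(2);
        reporter.report("span-1");
        reporter.report("span-2");
        reporter.report("span-3"); // buffer is full, so this one is dropped
        System.out.println(reporter.nextBatch(10) + ", dropped=" + reporter.droppedCount());
    }
}
```

The design choice shown here is that the request path never waits on the network: tracing data is treated as droppable, so a slow collector costs some spans rather than request threads.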

Add the RabbitMQ dependency:

    <dependency>
        <groupId>org.springframework.amqp</groupId>
        <artifactId>spring-rabbit</artifactId>
    </dependency>

Add the RabbitMQ configuration:

    server:
      port: 8081
    spring:
      zipkin:
        base-url: http://localhost:9411 # address of Zipkin
        sender:
          type: rabbit # send data to Zipkin through RabbitMQ
      rabbitmq:
        host: localhost
        port: 5672
        username: xiaoyuge
        password: 123456
        listener:
          direct:
            retry: # retry policy
              enabled: true
      sleuth:
        sampler:
          # sampling probability between 0 and 1; 1 samples everything
          probability: 1
          # number of traces sampled per second, default 10; limits the volume of messages
          rate: 10

Change the Zipkin startup command:

    java -jar zipkin-server-2.24.2-exec.jar --RABBIT_ADDRESSES=127.0.0.1:5672 --RABBIT_USER=xiaoyuge --RABBIT_PASSWORD=123456
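The spring.sleuth.sampler.probability setting in the configuration above controls which fraction of traces is reported. One common way to implement such a setting is to derive the decision from the trace id, so that every service makes the same choice for the same trace. The sketch below shows that idea only; `ProbabilitySampler` is a hypothetical class and not the sampler Sleuth ships.

```java
/** Illustrative probability sampler that decides from the trace id itself. */
final class ProbabilitySampler {
    private static final long BUCKETS = 10_000L;
    private final long boundary; // how many of the 10,000 buckets are sampled

    ProbabilitySampler(double probability) {
        if (probability < 0.0 || probability > 1.0) {
            throw new IllegalArgumentException("probability must be between 0 and 1");
        }
        this.boundary = Math.round(probability * BUCKETS);
    }

    /** The same trace id always gives the same answer, whichever service asks. */
    boolean isSampled(long traceId) {
        return Math.floorMod(traceId, BUCKETS) < boundary;
    }

    public static void main(String[] args) {
        ProbabilitySampler half = new ProbabilitySampler(0.5);
        System.out.println(half.isSampled(4_999L)); // bucket 4999 is below the boundary 5000
        System.out.println(half.isSampled(5_000L)); // bucket 5000 is not
    }
}
```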

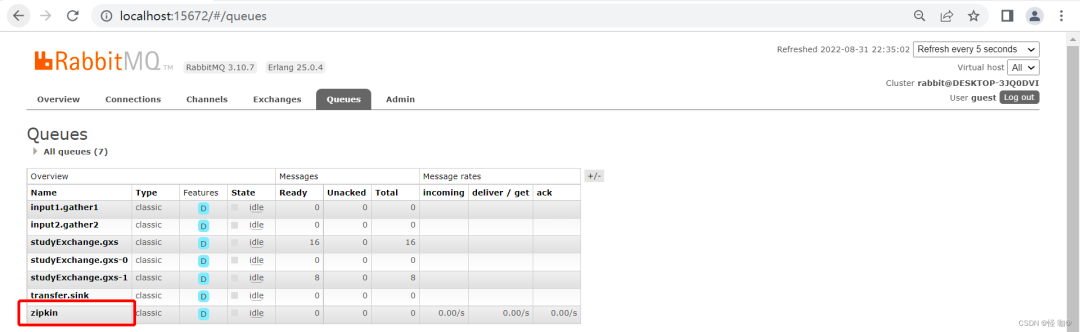

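Start the services and call the endpoint: http://localhost:8081. The zipkin queue is created automatically, and the messages are sent through this queue.

Opening Zipkin still shows the call information.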

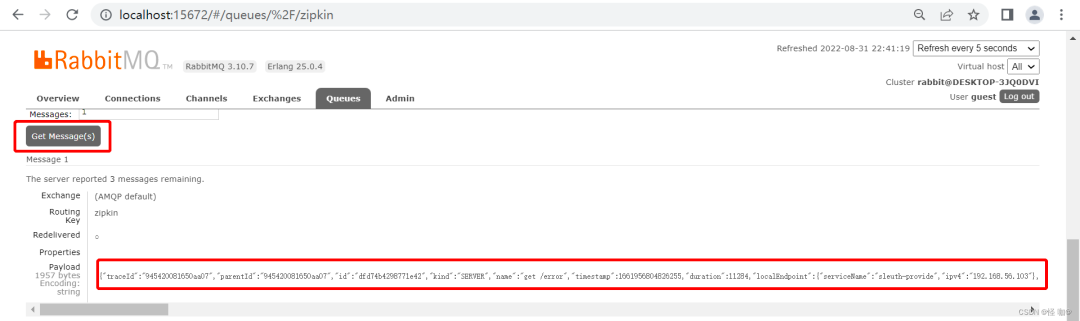

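2.4 The data Sleuth sends to Zipkin

To see exactly what Sleuth sends to Zipkin, stop Zipkin and then call the service endpoint. The messages in the queue are then not consumed by anyone, and they can be read in the RabbitMQ management page.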

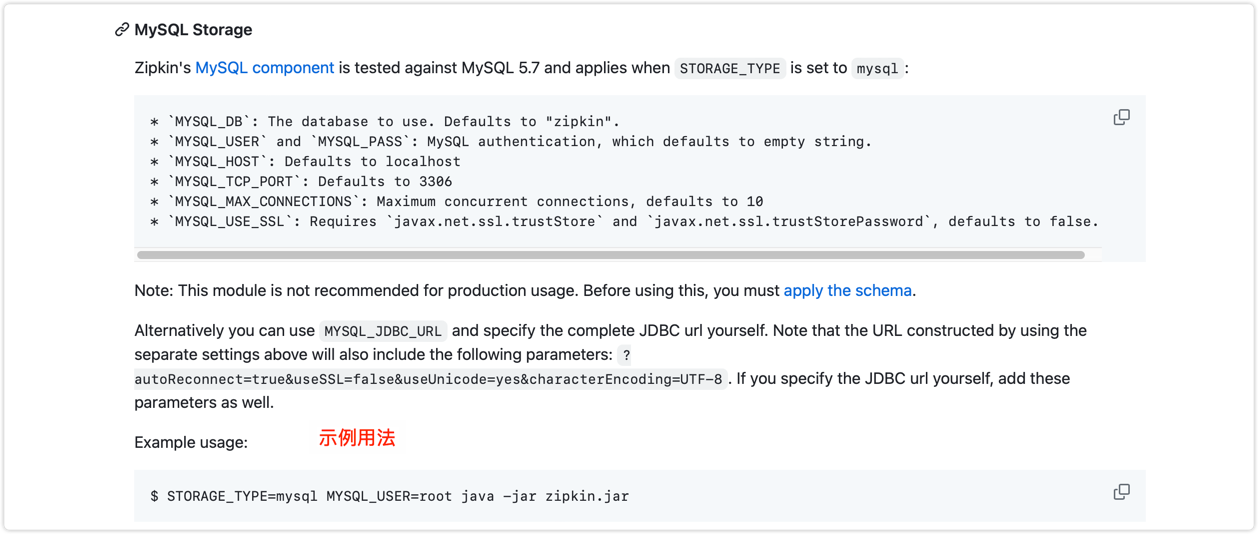

2.5 Configuring persistence for Zipkin

Without persistence, all collected data is lost when the Zipkin jar stops. Zipkin offers several storage options; two are commonly used:

- Store in MySQL
- Store in Elasticsearch

2.5.1 Persisting to MySQL

SQL file:

https://github.com/openzipkin/zipkin/blob/master/zipkin-storage/mysql-v1/src/main/resources/mysql.sql

    --
    -- Copyright 2015-2019 The OpenZipkin Authors
    --
    -- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except
    -- in compliance with the License. You may obtain a copy of the License at
    --
    -- http://www.apache.org/licenses/LICENSE-2.0
    --
    -- Unless required by applicable law or agreed to in writing, software distributed under the License
    -- is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express
    -- or implied. See the License for the specific language governing permissions and limitations under
    -- the License.
    --

    CREATE TABLE IF NOT EXISTS zipkin_spans (
      `trace_id_high` BIGINT NOT NULL DEFAULT 0 COMMENT 'If non zero, this means the trace uses 128 bit traceIds instead of 64 bit',
      `trace_id` BIGINT NOT NULL,
      `id` BIGINT NOT NULL,
      `name` VARCHAR(255) NOT NULL,
      `remote_service_name` VARCHAR(255),
      `parent_id` BIGINT,
      `debug` BIT(1),
      `start_ts` BIGINT COMMENT 'Span.timestamp(): epoch micros used for endTs query and to implement TTL',
      `duration` BIGINT COMMENT 'Span.duration(): micros used for minDuration and maxDuration query',
      PRIMARY KEY (`trace_id_high`, `trace_id`, `id`)
    ) ENGINE=InnoDB ROW_FORMAT=COMPRESSED CHARACTER SET=utf8 COLLATE utf8_general_ci;

    ALTER TABLE zipkin_spans ADD INDEX(`trace_id_high`, `trace_id`) COMMENT 'for getTracesByIds';
    ALTER TABLE zipkin_spans ADD INDEX(`name`) COMMENT 'for getTraces and getSpanNames';
    ALTER TABLE zipkin_spans ADD INDEX(`remote_service_name`) COMMENT 'for getTraces and getRemoteServiceNames';
    ALTER TABLE zipkin_spans ADD INDEX(`start_ts`) COMMENT 'for getTraces ordering and range';

    CREATE TABLE IF NOT EXISTS zipkin_annotations (
      `trace_id_high` BIGINT NOT NULL DEFAULT 0 COMMENT 'If non zero, this means the trace uses 128 bit traceIds instead of 64 bit',
      `trace_id` BIGINT NOT NULL COMMENT 'coincides with zipkin_spans.trace_id',
      `span_id` BIGINT NOT NULL COMMENT 'coincides with zipkin_spans.id',
      `a_key` VARCHAR(255) NOT NULL COMMENT 'BinaryAnnotation.key or Annotation.value if type == -1',
      `a_value` BLOB COMMENT 'BinaryAnnotation.value(), which must be smaller than 64KB',
      `a_type` INT NOT NULL COMMENT 'BinaryAnnotation.type() or -1 if Annotation',
      `a_timestamp` BIGINT COMMENT 'Used to implement TTL; Annotation.timestamp or zipkin_spans.timestamp',
      `endpoint_ipv4` INT COMMENT 'Null when Binary/Annotation.endpoint is null',
      `endpoint_ipv6` BINARY(16) COMMENT 'Null when Binary/Annotation.endpoint is null, or no IPv6 address',
      `endpoint_port` SMALLINT COMMENT 'Null when Binary/Annotation.endpoint is null',
      `endpoint_service_name` VARCHAR(255) COMMENT 'Null when Binary/Annotation.endpoint is null'
    ) ENGINE=InnoDB ROW_FORMAT=COMPRESSED CHARACTER SET=utf8 COLLATE utf8_general_ci;

    ALTER TABLE zipkin_annotations ADD UNIQUE KEY(`trace_id_high`, `trace_id`, `span_id`, `a_key`, `a_timestamp`) COMMENT 'Ignore insert on duplicate';
    ALTER TABLE zipkin_annotations ADD INDEX(`trace_id_high`, `trace_id`, `span_id`) COMMENT 'for joining with zipkin_spans';
    ALTER TABLE zipkin_annotations ADD INDEX(`trace_id_high`, `trace_id`) COMMENT 'for getTraces/ByIds';
    ALTER TABLE zipkin_annotations ADD INDEX(`endpoint_service_name`) COMMENT 'for getTraces and getServiceNames';
    ALTER TABLE zipkin_annotations ADD INDEX(`a_type`) COMMENT 'for getTraces and autocomplete values';
    ALTER TABLE zipkin_annotations ADD INDEX(`a_key`) COMMENT 'for getTraces and autocomplete values';
    ALTER TABLE zipkin_annotations ADD INDEX(`trace_id`, `span_id`, `a_key`) COMMENT 'for dependencies job';

    CREATE TABLE IF NOT EXISTS zipkin_dependencies (
      `day` DATE NOT NULL,
      `parent` VARCHAR(255) NOT NULL,
      `child` VARCHAR(255) NOT NULL,
      `call_count` BIGINT,
      `error_count` BIGINT,
      PRIMARY KEY (`day`, `parent`, `child`)
    ) ENGINE=InnoDB ROW_FORMAT=COMPRESSED CHARACTER SET=utf8 COLLATE utf8_general_ci;

Official configuration reference:

https://github.com/openzipkin/zipkin/tree/master/zipkin-server

Configuring persistence is simple: create a database and the tables in MySQL, then specify MySQL as the storage type when starting Zipkin.

    java -jar zipkin-server-2.24.2-exec.jar --STORAGE_TYPE=mysql --MYSQL_HOST=127.0.0.1 --MYSQL_TCP_PORT=3306 --MYSQL_DB=zipkin --MYSQL_USER=xiaoyuge --MYSQL_PASS=123456 --RABBIT_ADDRESSES=127.0.0.1:5672 --RABBIT_USER=xiaoyuge --RABBIT_PASSWORD=123456
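The schema stores trace ids in BIGINT columns (trace_id, plus trace_id_high for 128-bit ids), while logs and the Zipkin UI show them as hex strings. To look up a trace directly in MySQL, the hex string therefore has to be converted first. The following is a minimal sketch, assuming the ids are stored as signed 64-bit values; `TraceIdColumns` is a hypothetical helper written for this example.

```java
/** Converts between hex trace ids and the 64-bit values held in BIGINT columns. */
final class TraceIdColumns {

    /** Lower 64 bits of a 16- or 32-character hex trace id (candidate trace_id value). */
    static long traceIdLow(String hex) {
        String low = hex.length() > 16 ? hex.substring(hex.length() - 16) : hex;
        return Long.parseUnsignedLong(low, 16);
    }

    /** Upper 64 bits of a 128-bit trace id, or 0 for a 64-bit id (candidate trace_id_high value). */
    static long traceIdHigh(String hex) {
        if (hex.length() <= 16) {
            return 0L;
        }
        return Long.parseUnsignedLong(hex.substring(0, hex.length() - 16), 16);
    }

    /** Renders a stored 64-bit value back as 16 lower-case hex characters. */
    static String toHex(long id) {
        return String.format("%016x", id);
    }

    public static void main(String[] args) {
        long low = traceIdLow("0279711252ab628e"); // trace id from the provider log above
        System.out.println("trace_id = " + low + ", back to hex = " + toHex(low));
    }
}
```

The resulting number could then be used in a query such as SELECT * FROM zipkin_spans WHERE trace_id = ?. Ids whose first hex digit is 8 or higher come out as negative numbers, which is expected for a signed column.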

2.5.2 Persisting to Elasticsearch

For a detailed guide, see the official documentation: https://github.com/openzipkin/zipkin/tree/master/zipkin-server

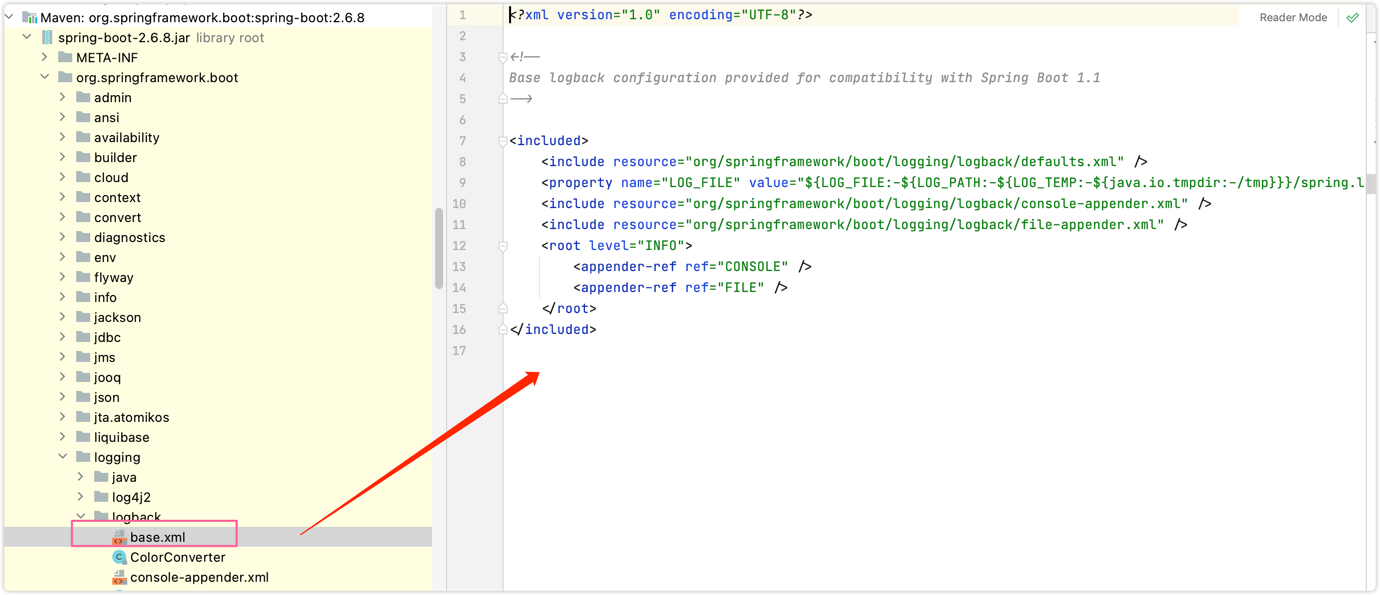

3. How Sleuth outputs the TraceId

First, find Spring Boot's default logback configuration.

The source is as follows:

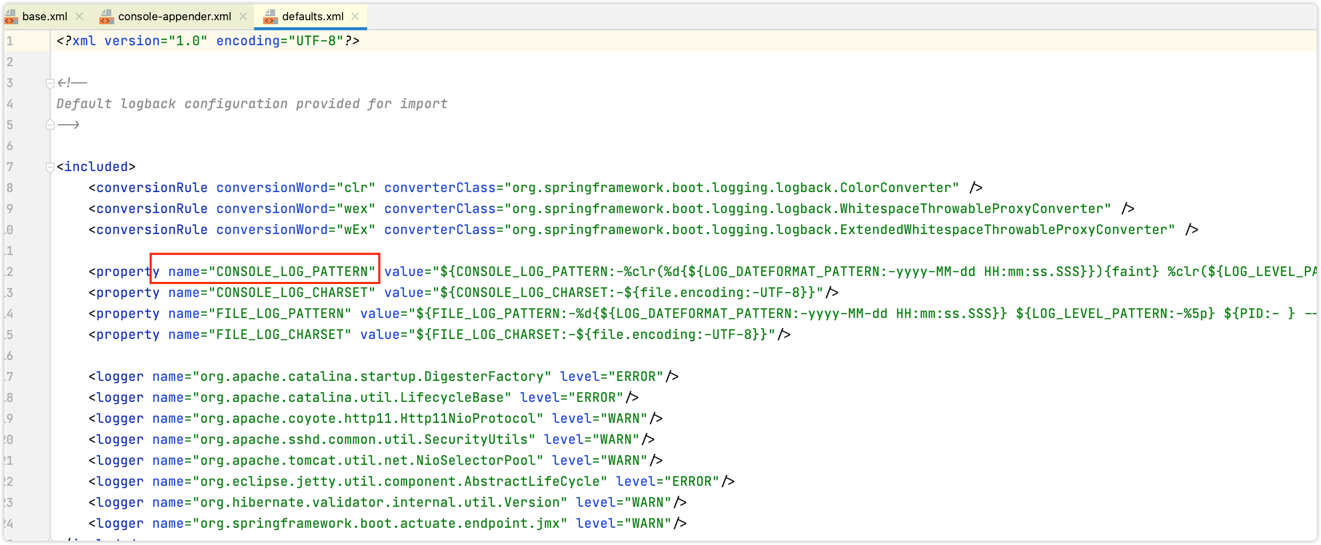

base.xml includes console-appender.xml and file-appender.xml.

The console-appender.xml file:

    <included>
        <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
            <encoder>
                <pattern>${CONSOLE_LOG_PATTERN}</pattern>
                <charset>${CONSOLE_LOG_CHARSET}</charset>
            </encoder>
        </appender>
    </included>

The log pattern uses the variable CONSOLE_LOG_PATTERN, which holds Spring Boot's default log format.

Next, look at how spring-cloud-sleuth-autoconfigure applies its configuration.

The work is done by the postProcessEnvironment method of the TraceEnvironmentPostProcessor class. At startup it checks whether Sleuth is enabled. If it is, it sets the logging.pattern.level property (the LOG_LEVEL_PATTERN variable that CONSOLE_LOG_PATTERN refers to), which is how the application name, trace id and span id end up in every log line.

    public void postProcessEnvironment(ConfigurableEnvironment environment, SpringApplication application) {
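The effect of that log pattern can be mimicked in plain Java. The sketch below assumes the level pattern has the shape %5p [application,%X{traceId:-},%X{spanId:-}], where %5p right-aligns the level in five characters and a missing MDC value renders as an empty string. `LevelPatternDemo` is a hypothetical class written only to illustrate the resulting prefix; it does not use logback.

```java
/** Mimics the log prefix produced by a "%5p [app,traceId,spanId]" level pattern. */
final class LevelPatternDemo {

    /** Renders the level, padded to five characters, followed by the bracketed tracing fields. */
    static String render(String level, String application, String traceId, String spanId) {
        return String.format("%5s [%s,%s,%s]", level, application, orEmpty(traceId), orEmpty(spanId));
    }

    /** A value missing from the MDC is rendered as an empty string. */
    private static String orEmpty(String value) {
        return value == null ? "" : value;
    }

    public static void main(String[] args) {
        // With an active span, as in the demo logs.
        System.out.println(render("INFO", "sleuth-demo", "92f966e849750cc3", "92f966e849750cc3"));
        // Without an active span, the ids are simply empty.
        System.out.println(render("DEBUG", "sleuth-demo", null, null));
    }
}
```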

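4. The logback logging problem

In a distributed system, how do you quickly locate the logs of one user's request?

Zipkin does not provide detailed error logs, such as which line failed. What Zipkin tells us is which service in the chain failed; we can then use the trace id to find the detailed error in the log files.

Sometimes, however, a project uses its own logback.xml, and its log pattern does not include the trace id. How should it be configured in that case?

We can follow Spring Boot's default logging configuration and include the MDC keys traceId and spanId in the log pattern, for example %X{traceId:-} and %X{spanId:-}.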

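5. Tracing systems other than Zipkin

| System | Positioning | Development team | Supported storage |
|---|---|---|---|
| CAT | Real-time application monitoring platform | Wu Qimin's team (Ctrip), You Yong's team (Dianping) | Local files, HDFS, MySQL |
| Zipkin | Distributed tracing system | Twitter | MySQL, Elasticsearch, Cassandra |
| Skywalking | Distributed tracing system, application monitoring system | Entered the Apache Incubator in December 2017 | Elasticsearch, MySQL, TiDB, H2, ShardingSphere |
| Pinpoint | Distributed tracing system, application monitoring system | Naver team | HBase |

- CAT is a more comprehensive platform with the widest range of monitoring features, and several large companies in China use it in production. Its development progress and release cadence are relatively slow.
- Zipkin was open-sourced by Twitter. It is a call-chain analysis tool that became widely used through spring-cloud-sleuth. It is very lightweight and simple to deploy and use.
- Skywalking focuses on tracing and performance monitoring. It is an open-source project from China, instruments applications without code changes, and has a capable UI. Its acceptance into the Apache Incubator indicates that its design and code have earned some recognition, and it is likely to attract more development effort. According to the author, it currently has the most adopters, and it is updated frequently.
- Pinpoint focuses on tracing and performance monitoring. It is open-sourced by a Korean development team, instruments applications without code changes, and has a capable UI. It is maintained by a small team, so long-term maintenance is uncertain, but new versions are still being released.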